Abstract

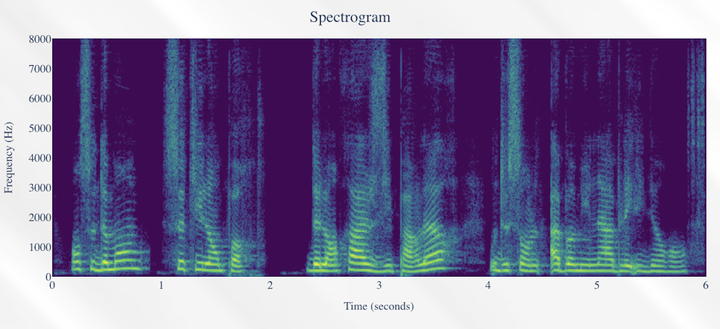

This research project grew out of a collaboration between the LMSSC team (Laboratory of Mechanics of Structures and Coupled Systems) of the CNAM and the APC team (Acoustics and Protection of the Combatant) of the ISL. Its objective is to improve the intelligibility of speech captured by an in-ear microphone developed by the ISL. This unconventional pick-up device, coupled with active hearing protection, captures the speaker's voice while eliminating external noise. However, the acoustic path between the mouth and the transducers causes a total loss of information above 2 kHz. At low frequencies, a slight amplification and physiological noises are observed. The problem is therefore twofold: reconstructing a signal whose high frequencies are absent, and denoising it. Deep learning methods will be used to reconstruct the high frequencies, rather than the source-filter model, which cannot restore missing information. A first phase of analysis of the captured signals is necessary to model the degradation and observe its variability. A sizable database can then be built by applying digital filtering that simulates the observed degradation. To enrich this database and avoid overfitting, a random component will be introduced into the filtering. Deep neural networks can then be designed to regenerate the emitted signal from the degraded signal. The network architectures, the cost functions and the training strategies will be explored broadly. The final objective is to deploy an inference network on a programmable board for real-time processing. Particular attention will be paid to the size of the network and to the processing time on this type of lightweight, low-power architecture.
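The simulated degradation with a random component can be illustrated by a minimal sketch. It is not the project's actual filter: the cutoff range (1800 to 2200 Hz), the gain range (0 to 3 dB), the number of cascaded sections and the function names `lowpass_biquad`, `apply_biquad` and `degrade` are all assumptions chosen for illustration. The sketch cascades identical second-order low-pass sections around 2 kHz and applies a small random gain; physiological noise is left out.

```python
import math
import random


def lowpass_biquad(fc, fs, q=1 / math.sqrt(2)):
    """Normalized (b, a) coefficients of a second-order low-pass section."""
    w0 = 2 * math.pi * fc / fs
    alpha = math.sin(w0) / (2 * q)
    cos_w0 = math.cos(w0)
    a0 = 1 + alpha
    b = [(1 - cos_w0) / 2 / a0, (1 - cos_w0) / a0, (1 - cos_w0) / 2 / a0]
    a = [1.0, -2 * cos_w0 / a0, (1 - alpha) / a0]
    return b, a


def apply_biquad(x, b, a):
    """Filter the sequence x with one biquad section (direct form I)."""
    y = []
    x1 = x2 = y1 = y2 = 0.0
    for xn in x:
        yn = b[0] * xn + b[1] * x1 + b[2] * x2 - a[1] * y1 - a[2] * y2
        x2, x1 = x1, xn
        y2, y1 = y1, yn
        y.append(yn)
    return y


def degrade(x, fs, rng, sections=4):
    """Simulate an in-ear capture: random low-pass near 2 kHz plus a slight gain."""
    fc = rng.uniform(1800.0, 2200.0)   # assumed cutoff range, in Hz
    gain_db = rng.uniform(0.0, 3.0)    # assumed "slight amplification" range
    b, a = lowpass_biquad(fc, fs)
    y = list(x)
    for _ in range(sections):          # cascading sections steepens the roll-off
        y = apply_biquad(y, b, a)
    gain = 10 ** (gain_db / 20)
    return [gain * v for v in y]
```

Calling `degrade(clean, 16000, random.Random())` on each clean recording would yield a different degraded version on every pass, which is one way to read the role of the random component in limiting overfitting.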

Slides: