Quiet Drones 2022 - Paris

27/06/2022

Éric Bavu(1), Hadrien Pujol(1), Alexandre Garcia(1), Christophe Langrenne(1),

Sébastien Hengy(2), Oussama Rassy(2),

Nicolas Thome(3), Yannis Karmim(3),

Stéphane Schertzer(2), Alexis Matwyschuk(2)

A deep-learning-based multimodal approach for aerial drone detection and localization

Project partners

Funding

Protection against the illicit use of aerial drones

Network of audio and video sensors

Microphone arrays

Optronic system

Localization and detection

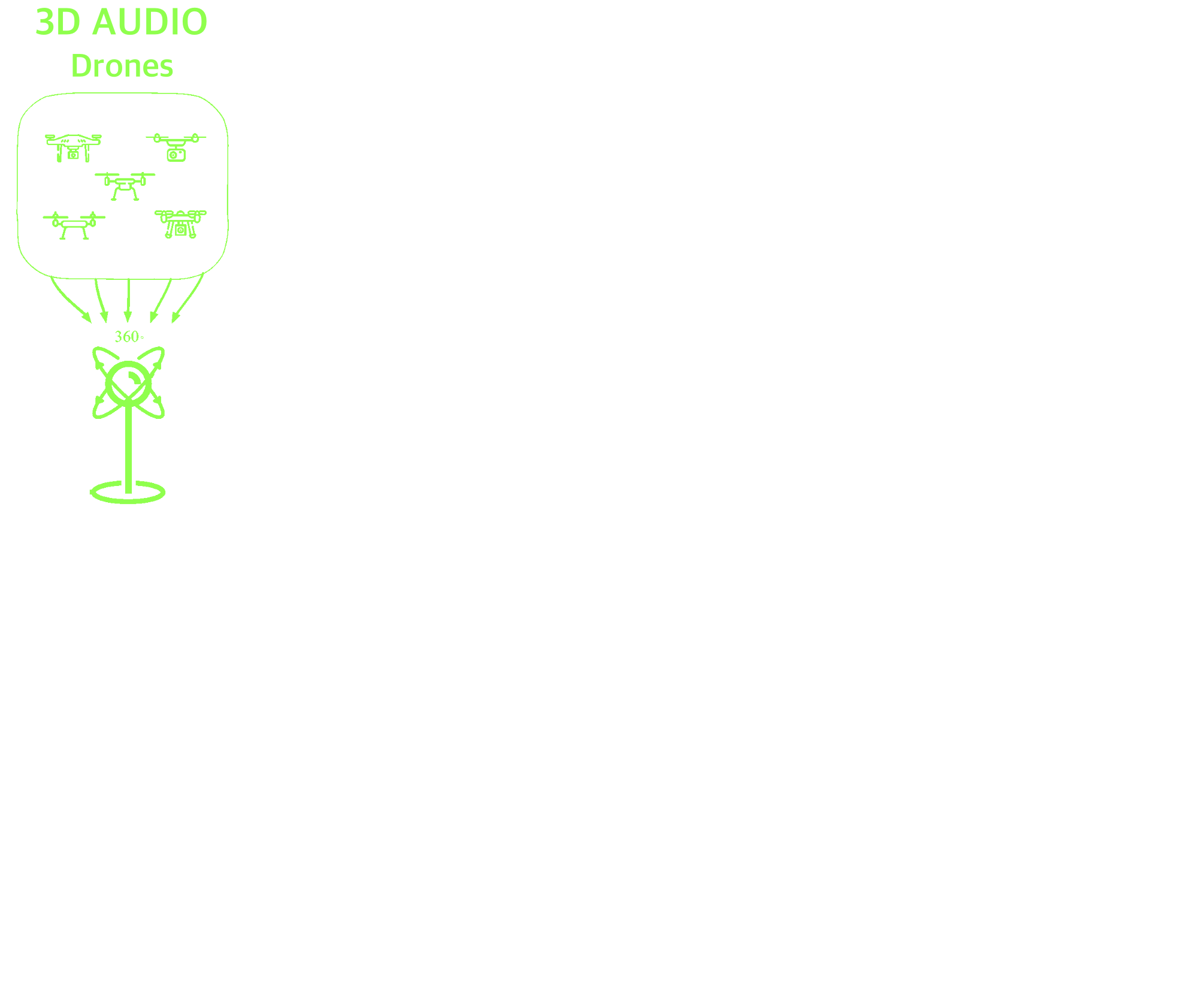

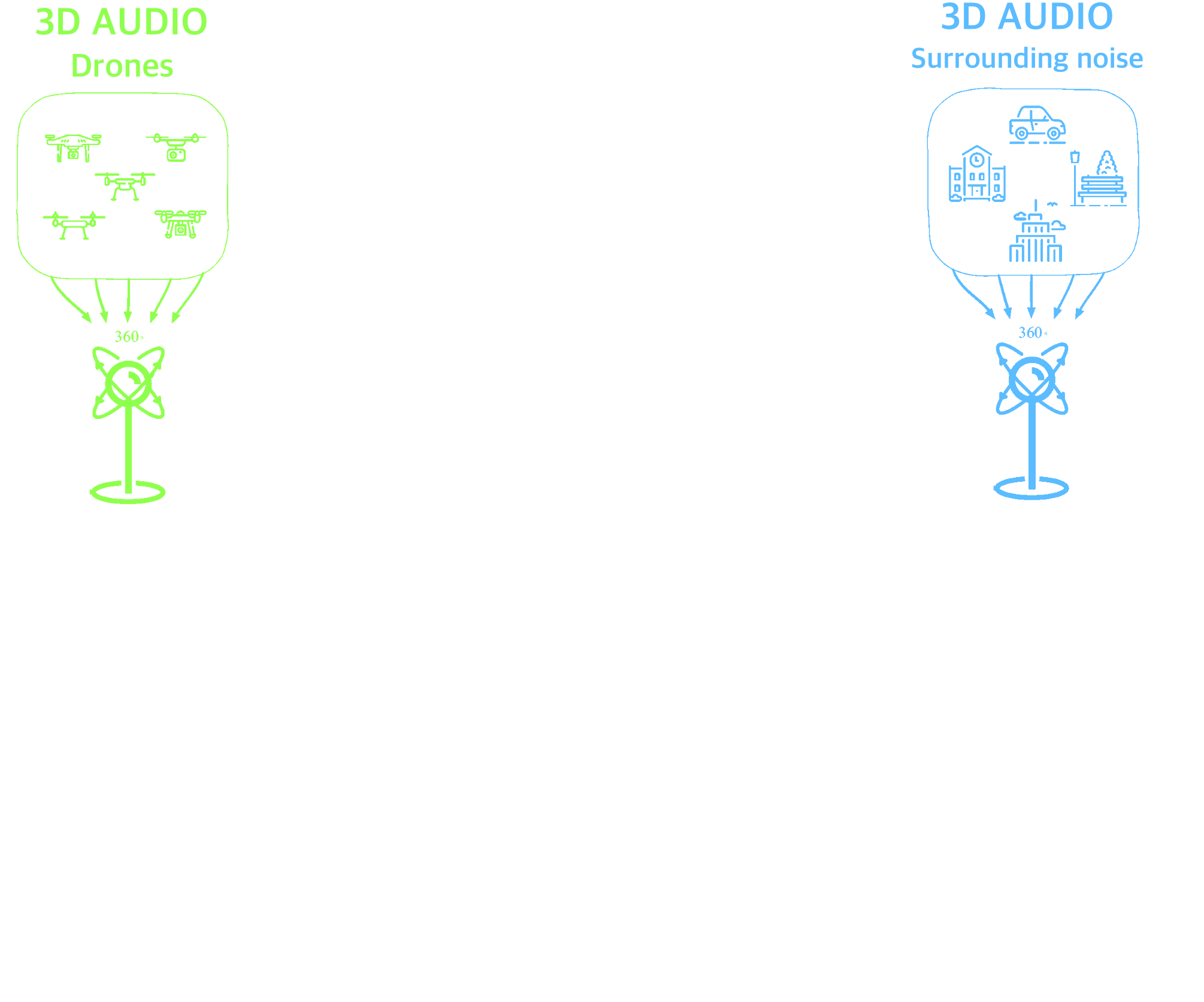

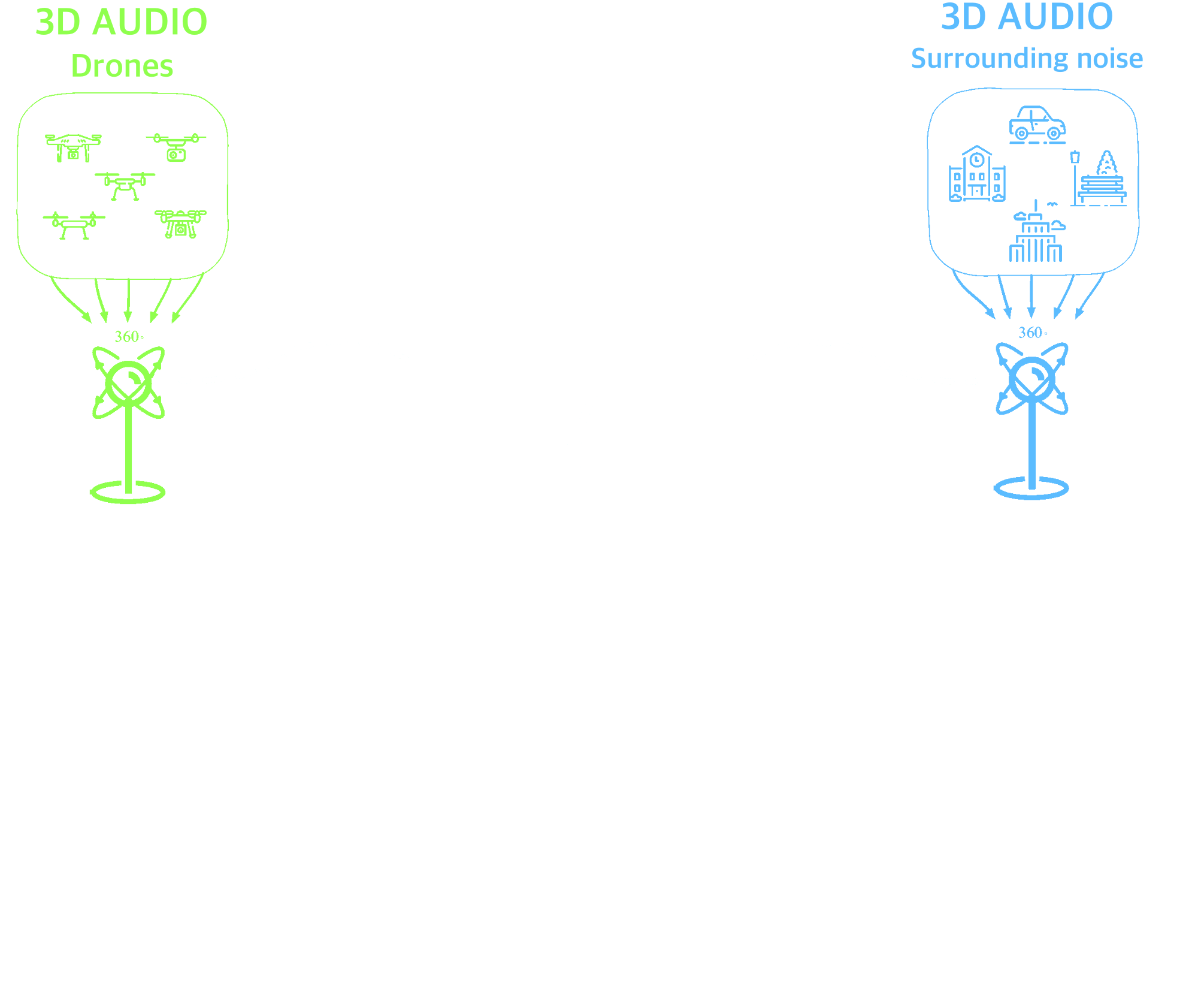

3D Audio

Audio/video

Deep Learning

Automatic video tracking

Modular network of sensors

Installation examples

Independent, compact deep-learning processors

AI-enhanced microphone array

Real-time drone localization using audio deep learning

- ≈ 15 hectares / array

- 40 estimates / s / array

- Latency < 20 ms
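The per-array figures can be related with a little arithmetic. The sketch below assumes, for illustration only, that the 15-hectare coverage is a disk centred on the array, and it uses the 48 kHz recording sample rate listed in the dataset description. It converts the coverage into an equivalent radius and the estimation rate into the time (and number of audio samples) between two consecutive estimates. Whether the analysis windows overlap is not stated.

```python
import math

# Figures quoted on the slide (per microphone array).
COVERAGE_HA = 15.0        # ≈ 15 hectares per array
ESTIMATES_PER_S = 40.0    # localization estimates per second
LATENCY_MS = 20.0         # upper bound on processing latency
SAMPLE_RATE_HZ = 48_000   # recording sample rate from the dataset description

# Assumption: the covered area is a disk centred on the array.
area_m2 = COVERAGE_HA * 10_000.0          # 1 ha = 10,000 m²
radius_m = math.sqrt(area_m2 / math.pi)   # A = π r²  =>  r = sqrt(A / π)

# Interval between two consecutive estimates, and the audio samples it spans.
hop_ms = 1000.0 / ESTIMATES_PER_S
hop_samples = SAMPLE_RATE_HZ / ESTIMATES_PER_S

print(f"equivalent radius ≈ {radius_m:.0f} m")
print(f"interval = {hop_ms:.0f} ms = {hop_samples:.0f} samples at 48 kHz")
print("latency shorter than interval:", LATENCY_MS < hop_ms)
```

Under the disk assumption, 15 ha corresponds to a radius of roughly 220 m. A latency below 20 ms is shorter than the 25 ms between estimates, so each estimate can be produced before the next one is due.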

Absolute 3D angular error < 4°
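The slide does not define the 3D angular error. A common definition, assumed here, is the great-circle angle between the estimated and ground-truth directions. Unlike separate azimuth and elevation errors, it handles azimuth wrap-around and does not inflate errors near the zenith. The helper names and the axis convention (x at azimuth 0, z pointing up) are illustrative choices.

```python
import numpy as np

def unit_vector(azimuth_deg, elevation_deg):
    """Cartesian unit vector(s) from azimuth/elevation in degrees (x: az = 0, z: up)."""
    az = np.radians(azimuth_deg)
    el = np.radians(elevation_deg)
    return np.stack([np.cos(el) * np.cos(az),
                     np.cos(el) * np.sin(az),
                     np.sin(el)], axis=-1)

def angular_error_deg(az_est, el_est, az_true, el_true):
    """Great-circle angle (degrees) between estimated and ground-truth directions."""
    u = unit_vector(az_est, el_est)
    v = unit_vector(az_true, el_true)
    cos_angle = np.clip(np.sum(u * v, axis=-1), -1.0, 1.0)  # guard against rounding
    return np.degrees(np.arccos(cos_angle))

# Three illustrative estimate/ground-truth pairs (not measured data).
errors = angular_error_deg(np.array([10.0, 359.0, 0.0]), np.array([0.0, 0.0, 89.0]),
                           np.array([0.0, 1.0, 180.0]), np.array([0.0, 0.0, 89.0]))
print(np.round(errors, 3), "median:", np.median(errors))
```

The second pair shows azimuth wrap-around (359° vs 1° is a 2° error). The third pair differs by 180° in azimuth but only by 2° in direction, because both points lie 1° from the zenith.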

Simultaneous recognition of the drone model

- Acoustic signature recognition

- Multitask A.I. (localization + recognition)

- Rate: 40 estimates / s / array

Detection rate > 95% / Recognition rate > 85%
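The slides do not say how these two rates are computed. One plausible per-frame definition, assumed here for illustration, is as follows. The detection rate is the fraction of frames containing a drone in which a drone is reported. The recognition rate is the fraction of those detected frames in which the predicted drone model is correct. The function name and the use of `None` for "no drone" are hypothetical.

```python
from typing import Optional, Sequence, Tuple

def detection_and_recognition_rates(
    true_models: Sequence[Optional[str]],
    predicted_models: Sequence[Optional[str]],
) -> Tuple[float, float]:
    """Per-frame rates. None means 'no drone' in the ground truth
    or 'no drone detected' in the prediction."""
    # Frames where a drone is actually present.
    drone_frames = [(t, p) for t, p in zip(true_models, predicted_models) if t is not None]
    if not drone_frames:
        raise ValueError("no frames with a drone present")
    # Among those, frames where the system reported some drone.
    detected = [(t, p) for t, p in drone_frames if p is not None]
    detection_rate = len(detected) / len(drone_frames)
    # Among detected frames, fraction where the predicted model is the true one.
    recognition_rate = (sum(t == p for t, p in detected) / len(detected)) if detected else 0.0
    return detection_rate, recognition_rate

truth = ["A", "A", "B", "B", None]
preds = ["A", "B", "B", None, "A"]
print(detection_and_recognition_rates(truth, preds))
```

In this toy example, 3 of the 4 drone frames are detected (detection rate 0.75), and 2 of those 3 are assigned the right model (recognition rate 2/3). The last frame is a false alarm. It affects neither rate, so it would have to be reported through a separate false-alarm rate.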

Innovative A.I. training using 3D ambisonics spatialization


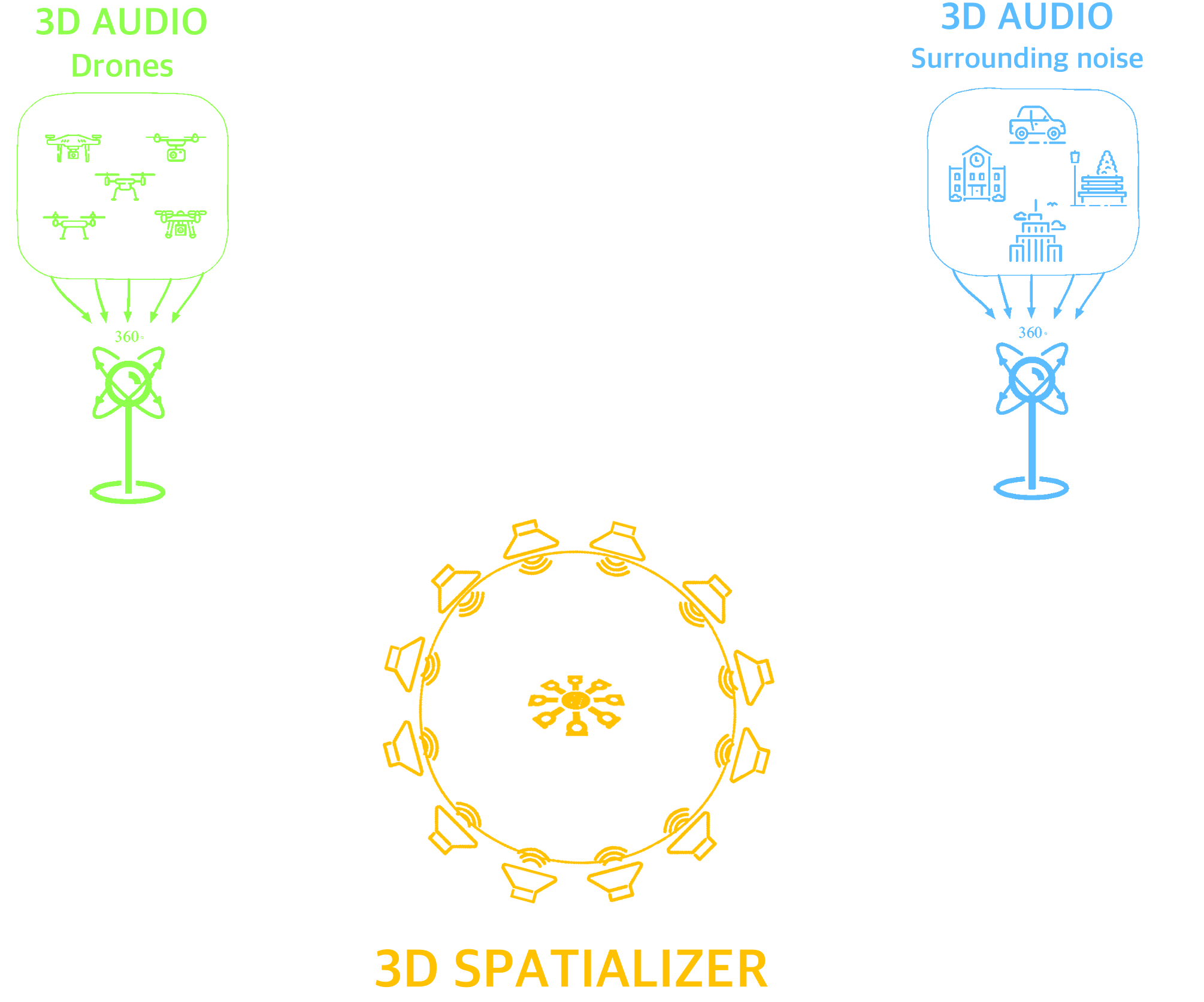

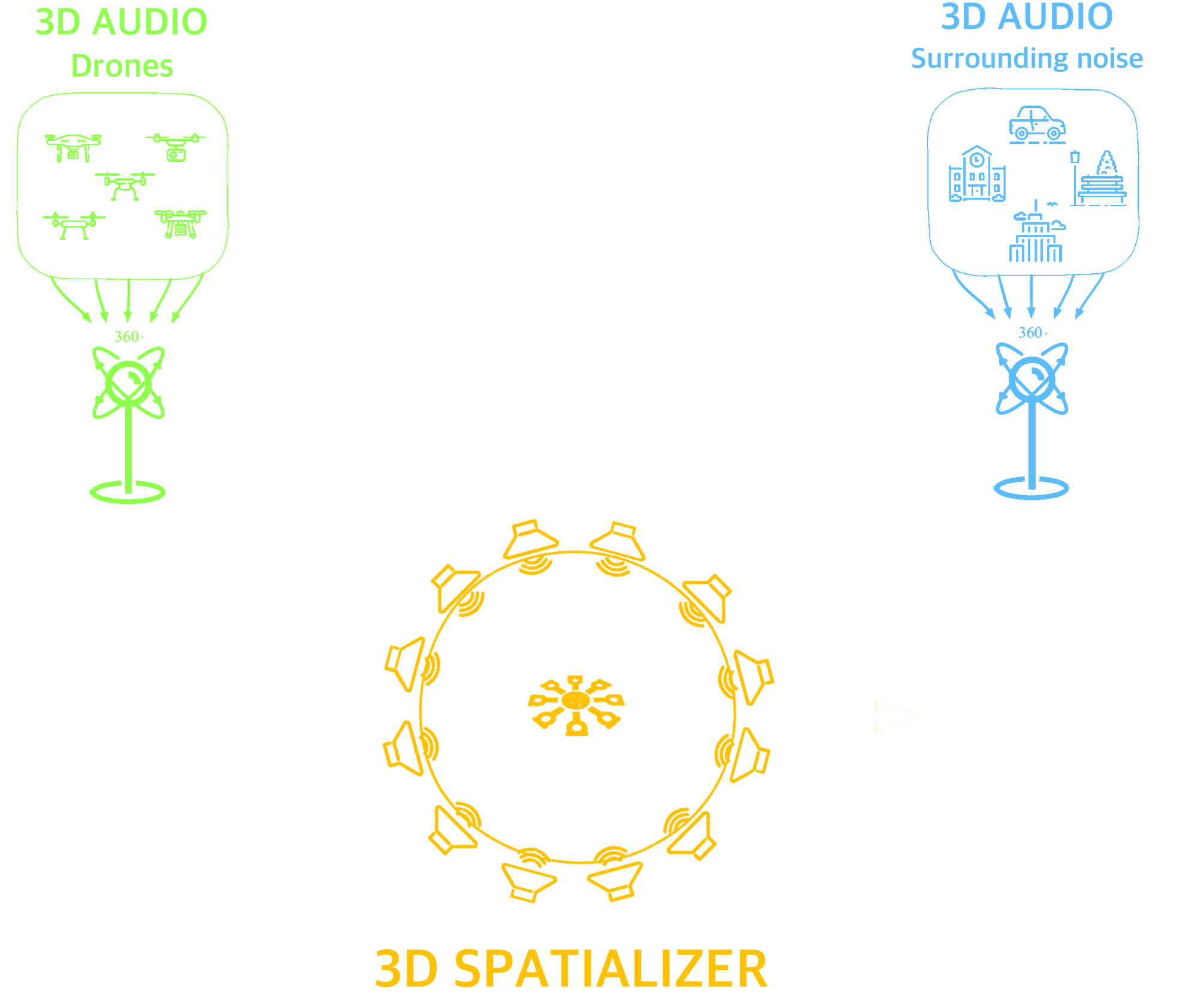

3D Spatializer

- Playback and modification of 3D drone trajectories

- Rotation of audio scenes

- Real-time soundfield synthesis

- Automatic labeling

- Simplified inclusion of new drones in the dataset
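Scene rotation explains how one recorded flight can be turned into many labeled training examples. The sketch below is restricted to first-order Ambisonics and to a rotation about the vertical axis. It assumes channel order W, X, Y, Z and SN3D-style gains (W gain = 1). The system described here works at order 5, where each order needs its own, larger rotation matrix. Rotating the scene by an angle θ shifts every source azimuth by θ, so the ground-truth label can be updated with the same angle instead of being re-annotated.

```python
import numpy as np

def encode_foa(mono, azimuth_deg, elevation_deg):
    """Encode a mono signal as a first-order plane wave (W, X, Y, Z; W gain = 1)."""
    az, el = np.radians(azimuth_deg), np.radians(elevation_deg)
    return np.stack([mono,
                     mono * np.cos(el) * np.cos(az),
                     mono * np.cos(el) * np.sin(az),
                     mono * np.sin(el)])

def rotate_foa_yaw(wxyz, yaw_deg):
    """Rotate a first-order scene of shape (4, n) about the vertical (z) axis."""
    theta = np.radians(yaw_deg)
    w, x, y, z = wxyz
    x_rot = np.cos(theta) * x - np.sin(theta) * y
    y_rot = np.sin(theta) * x + np.cos(theta) * y
    return np.stack([w, x_rot, y_rot, z])  # W and Z are unchanged by a yaw rotation

mono = np.random.default_rng(0).standard_normal(8)
scene = encode_foa(mono, azimuth_deg=30.0, elevation_deg=10.0)
rotated = rotate_foa_yaw(scene, yaw_deg=45.0)
# The rotated scene should match a fresh encoding at 30° + 45° = 75°,
# so the azimuth label is simply shifted by the same 45°.
print(np.allclose(rotated, encode_foa(mono, 75.0, 10.0)))
```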

Ambisonic synthesis process

Signal:

- Several arrays on site

- 19 or 50 microphones / array

- > 55 h of recordings (800 GB)

- Sample rate: 48 kHz
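As an order-of-magnitude check on the dataset size, the raw size of a multichannel recording is duration × sample rate × channels × bytes per sample. The slide does not state the bit depth or how the 55 h are split between 19- and 50-microphone arrays. The sketch therefore assumes 32-bit samples and computes the two extreme cases. The function name is hypothetical.

```python
def raw_storage_bytes(hours, sample_rate_hz, channels, bytes_per_sample):
    """Uncompressed size in bytes of a multichannel recording."""
    return hours * 3600 * sample_rate_hz * channels * bytes_per_sample

# Assumption: 32-bit (4-byte) samples; the actual bit depth is not stated.
for channels in (19, 50):
    gb = raw_storage_bytes(55, 48_000, channels, 4) / 1e9
    print(f"55 h, {channels} channels: {gb:.0f} GB")
```

Under this assumption, 55 h would occupy about 722 GB with 19 channels and about 1.9 TB with 50 channels. The reported 800 GB falls within this range.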

Position:

- RTK GPS for ground-truth (g.t.) drone positions

- Orientation and position

- Sample rate > 5 Hz
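Because the position stream (> 5 Hz) is much slower than the audio estimates (40 per second), automatic labeling requires resampling the ground truth to each audio frame. The sketch below uses linear interpolation (`numpy.interp`), which may differ from what the authors used. It assumes the positions have already been expressed in east/north/up metres relative to the array centre, with the array orientation accounted for. It then converts each interpolated position into azimuth and elevation labels. The function name is hypothetical.

```python
import numpy as np

def labels_at_frames(gps_t, gps_enu, frame_t):
    """Interpolate drone positions to audio frame times and return (azimuth, elevation) in degrees.

    gps_t: (m,) increasing timestamps in s; gps_enu: (m, 3) east/north/up positions in metres
    relative to the array centre; frame_t: (n,) audio frame timestamps in s.
    """
    # Linear interpolation of each coordinate onto the audio frame times.
    pos = np.column_stack([np.interp(frame_t, gps_t, gps_enu[:, k]) for k in range(3)])
    east, north, up = pos.T
    azimuth = np.degrees(np.arctan2(north, east))                 # counter-clockwise from east
    elevation = np.degrees(np.arctan2(up, np.hypot(east, north)))
    return azimuth, elevation

# Two GPS fixes 0.2 s apart (5 Hz), resampled at three audio frame times.
gps_t = np.array([0.0, 0.2])
gps_enu = np.array([[100.0, 0.0, 100.0], [0.0, 100.0, 100.0]])
az, el = labels_at_frames(gps_t, gps_enu, np.array([0.0, 0.1, 0.2]))
print(np.round(az, 2), np.round(el, 2))
```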

Spatialization:

- Calibrated Ambisonics system

- 5th-order Ambisonics

- Real-time control

- Automated recording
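The ambisonic order sets the number of channels the spatialization system must handle. For full-sphere Ambisonics, spherical-harmonic degree n contributes 2n + 1 components, so order N uses (N + 1)² channels. The helper below is illustrative.

```python
def ambisonic_channel_count(order: int) -> int:
    """Number of full-sphere ambisonic channels for a given order."""
    # Degree n contributes 2n + 1 spherical-harmonic components, n = 0..order.
    return sum(2 * n + 1 for n in range(order + 1))

print(ambisonic_channel_count(1), ambisonic_channel_count(5))
```

First order therefore uses 4 channels, and the order-5 system listed above uses 36.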

Deep neural network for audio localization and recognition: BeamLearning-ID
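The slides describe BeamLearning-ID as multitask (localization + recognition) but do not give its architecture or training loss. A generic way to train one network on both tasks is to minimize a weighted sum of a localization loss and a classification loss. The NumPy sketch below shows that idea only. It uses a cosine-based direction loss plus softmax cross-entropy, and the function name and the `weight` parameter are hypothetical. It should not be read as the authors' objective.

```python
import numpy as np

def multitask_loss(pred_dir, true_dir, class_logits, true_class, weight=1.0):
    """Generic localization + recognition objective for one batch.

    pred_dir, true_dir: (batch, 3) direction vectors; class_logits: (batch, n_classes);
    true_class: (batch,) integer drone-model labels; weight balances the two tasks.
    """
    # Localization term: 1 - cos(angle) between normalized predicted and true directions.
    p = pred_dir / np.linalg.norm(pred_dir, axis=1, keepdims=True)
    t = true_dir / np.linalg.norm(true_dir, axis=1, keepdims=True)
    loc = np.mean(1.0 - np.sum(p * t, axis=1))
    # Recognition term: softmax cross-entropy, computed stably via log-sum-exp.
    shifted = class_logits - class_logits.max(axis=1, keepdims=True)
    log_probs = shifted - np.log(np.exp(shifted).sum(axis=1, keepdims=True))
    ce = -np.mean(log_probs[np.arange(len(true_class)), true_class])
    return loc + weight * ce
```

With a perfectly aligned direction and uniform logits over four drone models, the localization term is 0 and the loss reduces to log 4, the cross-entropy of an uninformed classifier.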

Localization results: azimuth (test flight)

Localization results: elevation (test flight)

Absolute 3D angular error (test flight)

Localization performance: statistics

Drone recognition performance: statistics

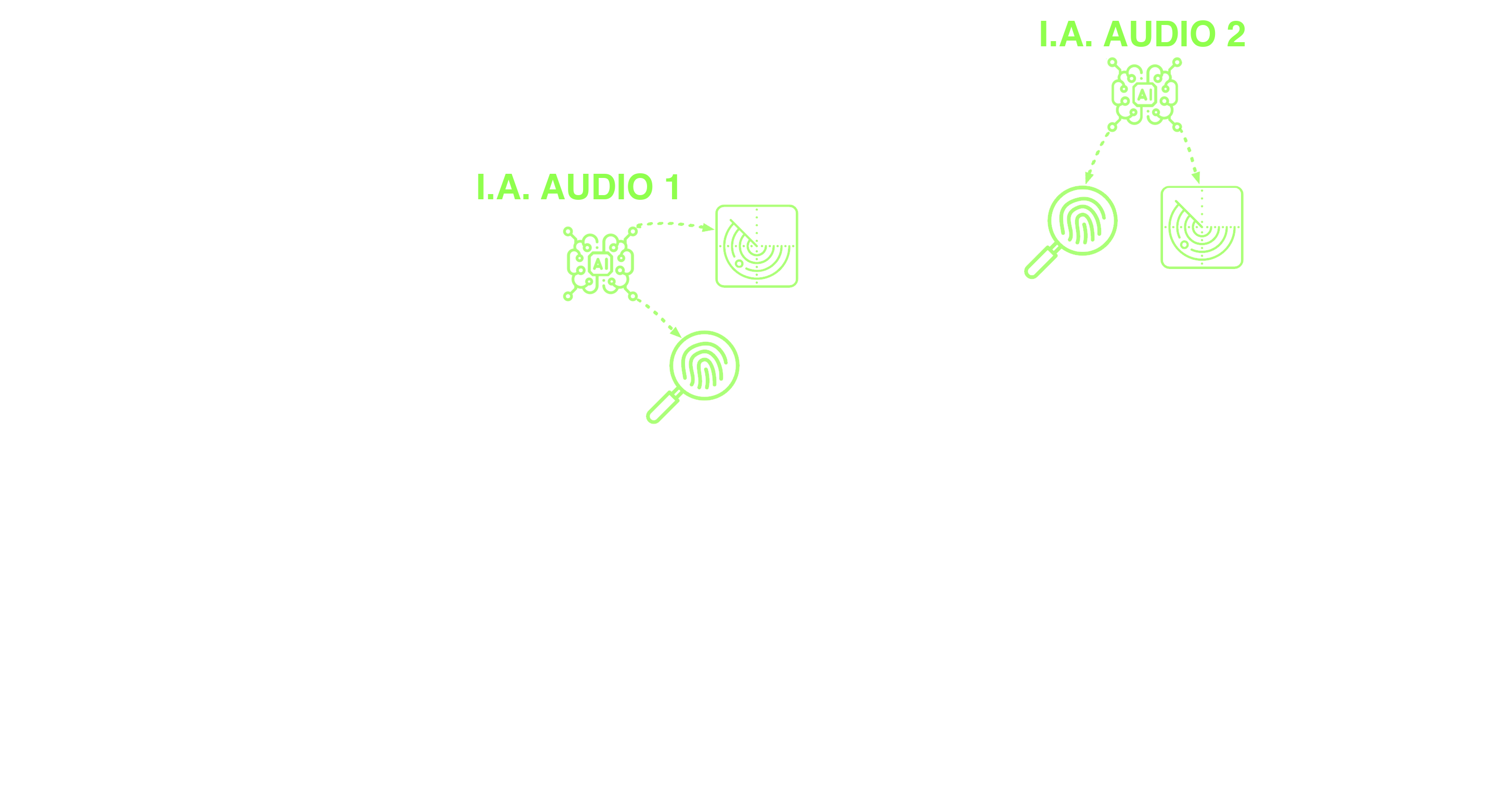

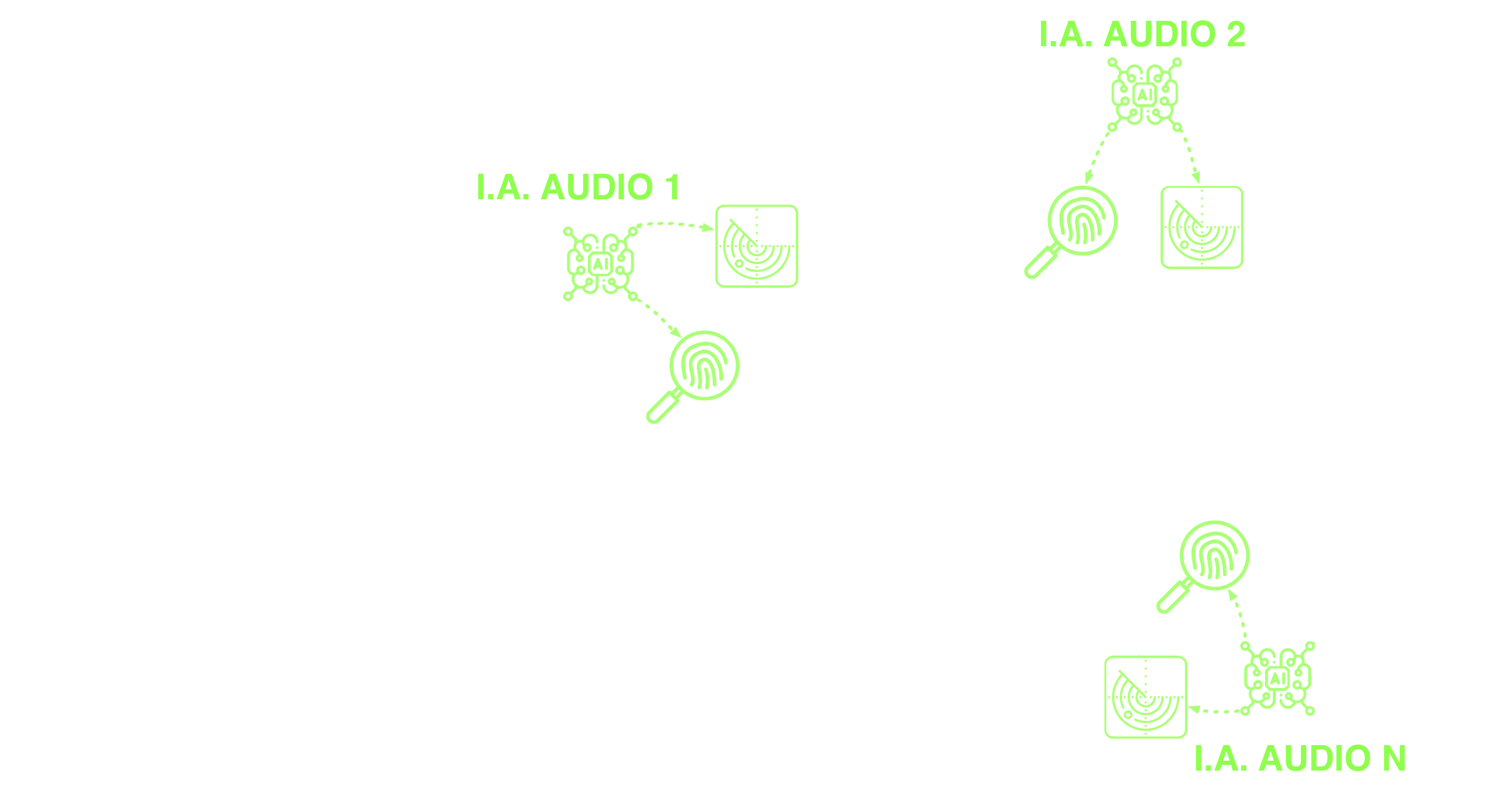

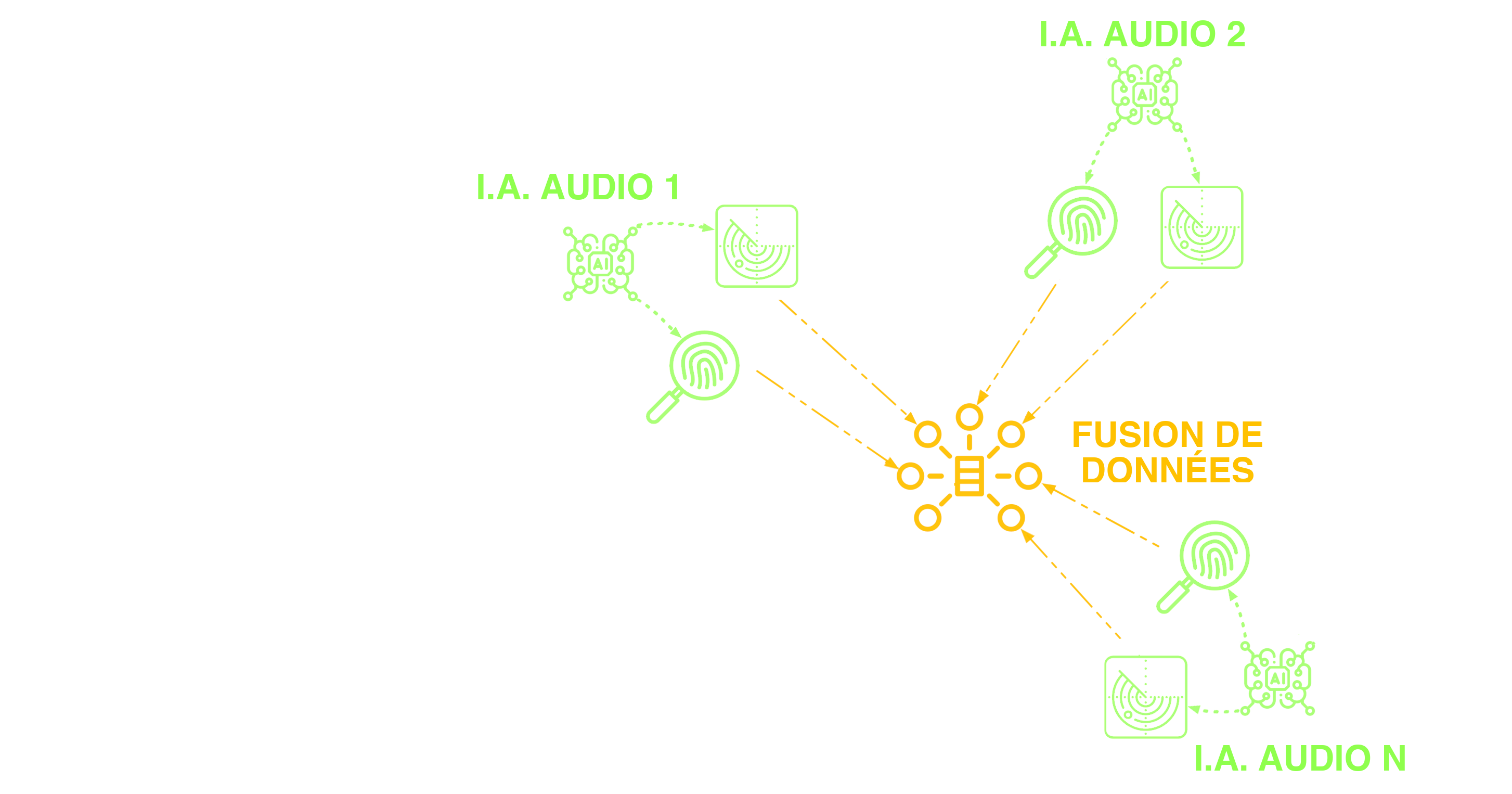

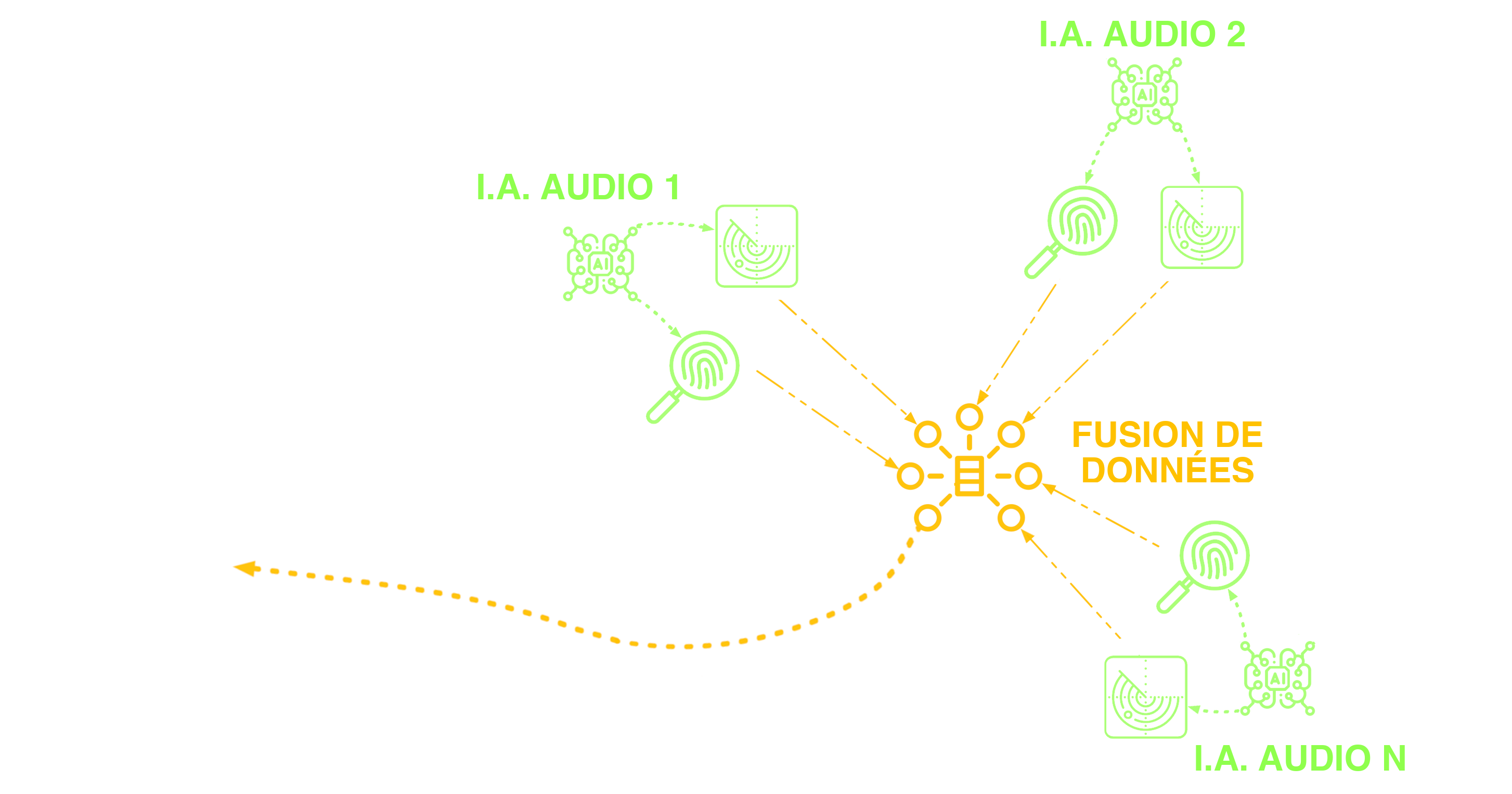

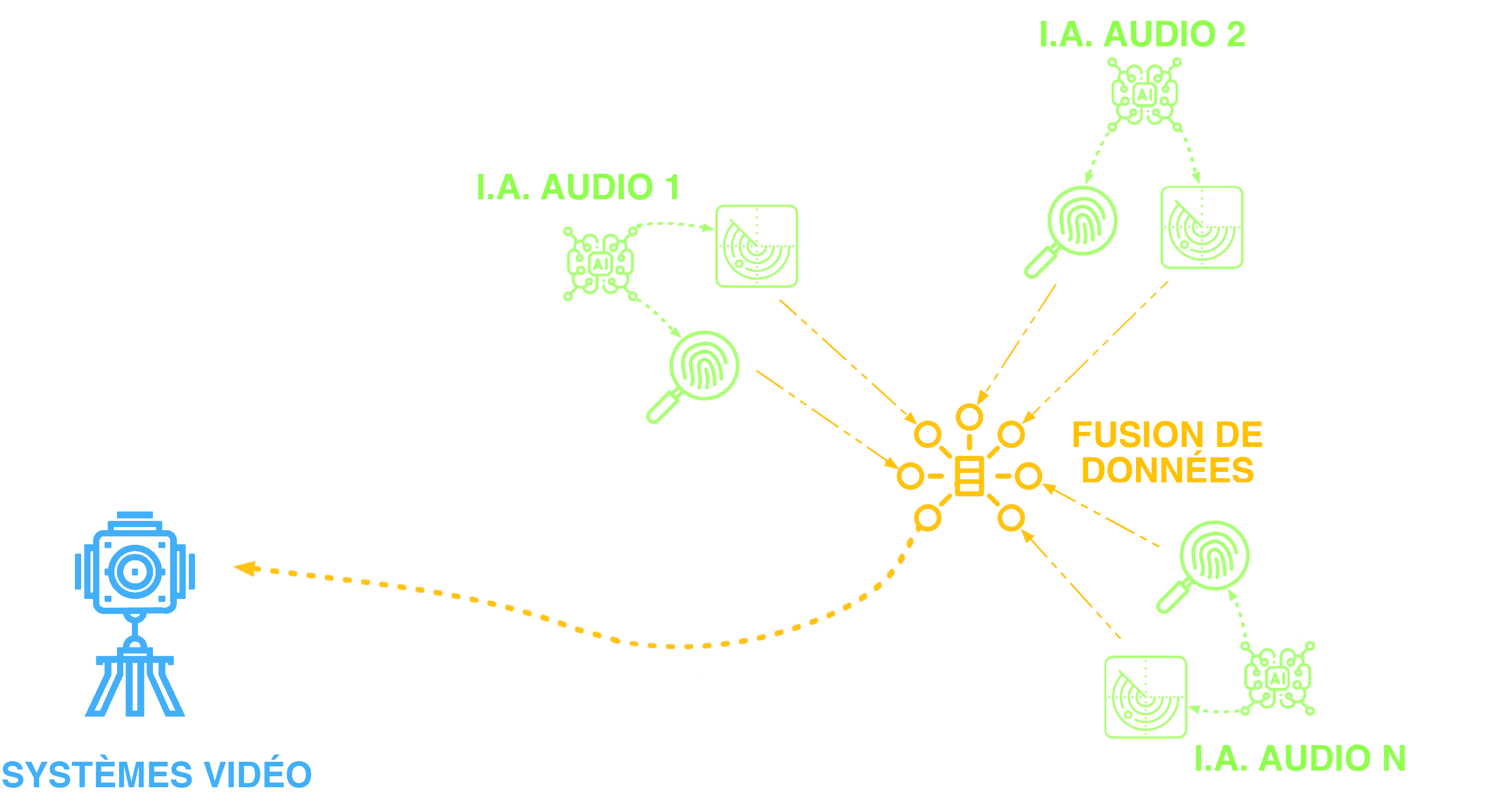

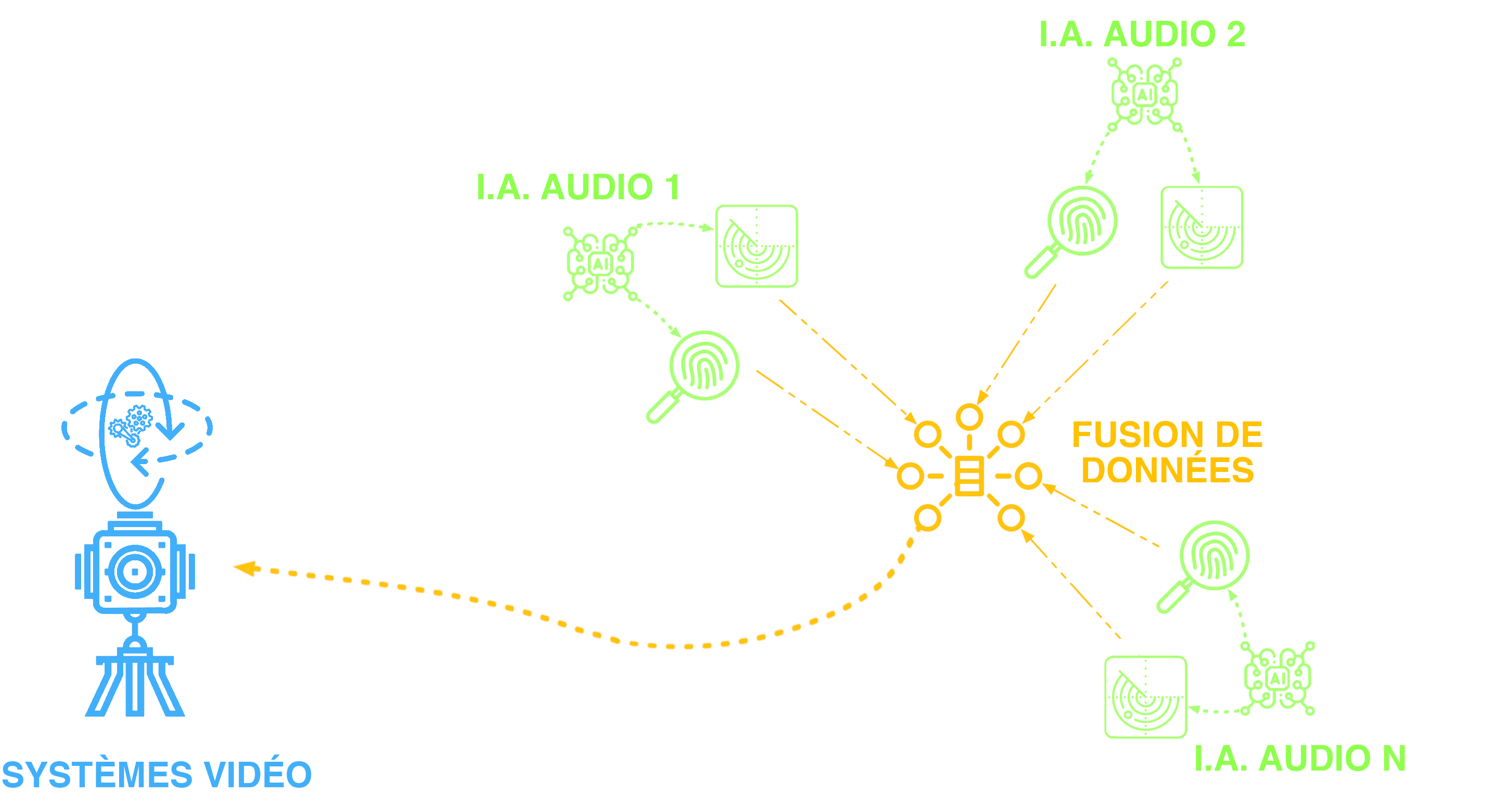

Data fusion: automatic orientation of video systems


• Automated camera control: visible, thermal and active imaging
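The slides do not detail how the acoustic estimates are turned into camera commands. A single array provides only a direction. One simple approach, assumed here, uses two arrays at known positions in a shared east/north/up frame. It triangulates the drone as the midpoint of the shortest segment between the two direction rays, then computes the pan and tilt that point a camera at that position. The function names and coordinates are hypothetical.

```python
import numpy as np

def triangulate(p1, d1, p2, d2):
    """Midpoint of the shortest segment between rays p1 + s*d1 and p2 + t*d2."""
    d1 = d1 / np.linalg.norm(d1)
    d2 = d2 / np.linalg.norm(d2)
    w = p1 - p2
    b = d1 @ d2
    denom = 1.0 - b * b                     # zero when the rays are parallel
    if denom < 1e-9:
        raise ValueError("rays are (nearly) parallel; position is unobservable")
    s = (b * (d2 @ w) - (d1 @ w)) / denom   # parameter of closest point on ray 1
    t = ((d2 @ w) - b * (d1 @ w)) / denom   # parameter of closest point on ray 2
    return 0.5 * ((p1 + s * d1) + (p2 + t * d2))

def pan_tilt_deg(camera_pos, target):
    """Pan (counter-clockwise from east) and tilt (elevation) to aim a camera at target."""
    e, n, u = target - camera_pos
    return np.degrees(np.arctan2(n, e)), np.degrees(np.arctan2(u, np.hypot(e, n)))

# Two arrays 100 m apart, both hearing a drone at (50, 50, 30) m.
array1, array2 = np.array([0.0, 0.0, 0.0]), np.array([100.0, 0.0, 0.0])
drone = triangulate(array1, np.array([50.0, 50.0, 30.0]),
                    array2, np.array([-50.0, 50.0, 30.0]))
print(drone, pan_tilt_deg(np.array([50.0, 0.0, 30.0]), drone))
```

With noisy estimates the two rays rarely intersect exactly, which is why the midpoint of their closest approach is used. The distance between the two closest points can serve as a rough consistency check before the camera is moved.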

Complementary optronic systems


• Night / Day / Smoke

• SWIR active imaging: background removal in difficult situations

Real-time drone tracking and recognition using video deep learning
